Digital Video Basics

Digital video involves capturing, processing, compressing, storing, and transmitting moving visual images in digital form. Understanding digital video requires knowledge of several key concepts, including chroma subsampling, bit depth, and color spaces such as YCbCr and RGB. These elements are crucial for balancing video quality with bandwidth requirements, particularly in video transmission.

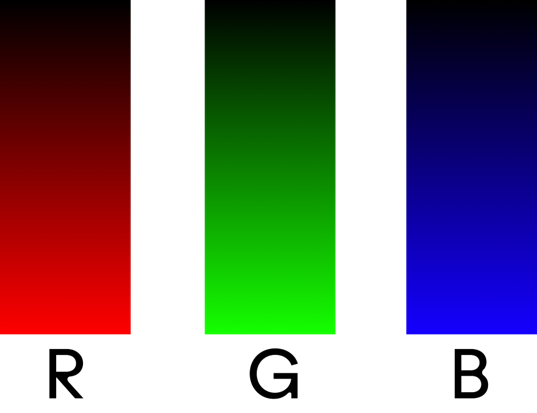

Color Space: YUV vs RGB

Color space refers to the method of representing the range of colors. YUV and RGB are two primary color spaces used in digital video.

RGB is based on the primary colors of light (red, green, and blue) and is used primarily by devices that capture or emit light directly, such as cameras, computer monitors, and TVs. It represents each color by combining these three primaries at different intensities, which makes it well suited to applications where precise color representation is crucial.

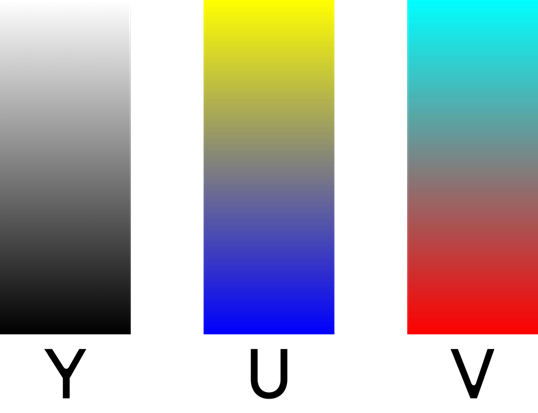

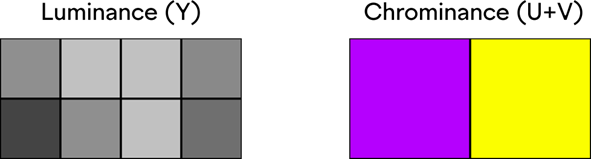

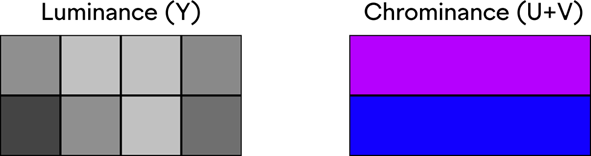

In the YUV color space, the Y component represents luminance (brightness), which on its own is essentially a grayscale version of the image. The U and V components carry chrominance, the color information, separately from luminance:

U (chrominance-B) is the scaled difference between the blue component and the luminance (B - Y). It indicates how blue the color is, or how far it leans toward the opposite of blue (yellowish).

V (chrominance-R) is the scaled difference between the red component and the luminance (R - Y). It indicates how red the color is, or how far it leans toward the opposite of red (cyan or greenish).
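The U and V definitions above can be sketched numerically. The following Python example assumes RGB values normalized to the range 0.0 to 1.0, the BT.601 luma weights (0.299, 0.587, 0.114), and the scale factors 0.492 and 0.877 used for analog YUV; digital YCbCr follows the same idea but uses different scale factors and adds offsets. The function name `rgb_to_yuv` is illustrative only.

```python
# Sketch: convert normalized RGB (0.0-1.0) to analog-style YUV.
# Assumptions: BT.601 luma weights and the classic analog U/V scale factors.
def rgb_to_yuv(r: float, g: float, b: float) -> tuple[float, float, float]:
    # Luma: weighted sum reflecting the eye's sensitivity to each primary
    y = 0.299 * r + 0.587 * g + 0.114 * b
    # Blue-difference chroma: how far blue sits above or below the luma
    u = 0.492 * (b - y)
    # Red-difference chroma: how far red sits above or below the luma
    v = 0.877 * (r - y)
    return y, u, v


if __name__ == "__main__":
    for name, rgb in [("white", (1.0, 1.0, 1.0)), ("blue", (0.0, 0.0, 1.0))]:
        y, u, v = rgb_to_yuv(*rgb)
        print(f"{name}: Y={y:.3f} U={u:+.3f} V={v:+.3f}")
```

A neutral color such as white yields U and V of approximately zero, while pure blue yields a positive U and a small negative V.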

The U and V components do not correspond directly to specific colors; they describe how far a color departs from the neutral gray of the same luminance. Adjusting them shifts the hue and saturation of a color, while Y keeps its brightness independent of hue and saturation.

This separation is particularly useful in broadcasting and video compression, where luminance matters more to perceived image quality than color detail. Because the human visual system is less sensitive to fine detail in color than in brightness, U and V can be stored at a lower resolution than Y, which allows more efficient compression.

Put simply, the U component spans the range from blue toward its opposite, a yellowish green, and the V component spans the range from red toward its opposite, a cyan or greenish hue. At the midpoint of each axis (a value of zero), that axis adds no color shift; when both U and V are zero, the pixel is a neutral gray whose brightness is set by Y.

In essence, the U component controls the blue-yellow balance, while the V component controls the red-cyan (or red-green) balance. By adjusting these two components along with the Y (luminance), you can navigate through the color space to represent a wide range of colors.
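To illustrate the blue-yellow and red-cyan balance, the sketch below reports which side of each axis a few sample colors fall on. It uses the same assumed BT.601 weights and analog YUV scale factors as a typical YUV conversion; the helper names `rgb_to_uv` and `describe` and the zero tolerance are hypothetical.

```python
# Sketch: show which way a color pushes the U (blue-yellow) and V (red-cyan) axes.
# Assumptions: BT.601 luma weights, analog YUV scale factors, RGB in 0.0-1.0.
def rgb_to_uv(r: float, g: float, b: float) -> tuple[float, float]:
    y = 0.299 * r + 0.587 * g + 0.114 * b
    return 0.492 * (b - y), 0.877 * (r - y)


def describe(u: float, v: float, eps: float = 1e-6) -> tuple[str, str]:
    # Values within eps of zero are treated as neutral on that axis
    u_side = "blue" if u > eps else "yellow" if u < -eps else "neutral"
    v_side = "red" if v > eps else "cyan/green" if v < -eps else "neutral"
    return u_side, v_side


SAMPLES = {
    "gray": (0.5, 0.5, 0.5),
    "blue": (0.0, 0.0, 1.0),
    "yellow": (1.0, 1.0, 0.0),
    "red": (1.0, 0.0, 0.0),
    "cyan": (0.0, 1.0, 1.0),
}

if __name__ == "__main__":
    for name, rgb in SAMPLES.items():
        print(name, describe(*rgb_to_uv(*rgb)))
```

Most saturated colors move both components: pure blue, for example, has a positive U and a slightly negative V, so a hue is a combination of the two axes rather than a position on just one.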

The choice between YUV and RGB in a digital video workflow depends on the application's requirements. YUV is typically used for video compression and transmission, where bandwidth efficiency is paramount. In contrast, RGB is used in contexts where accurate color representation and direct control over each primary color are needed, such as in content creation and display technologies.

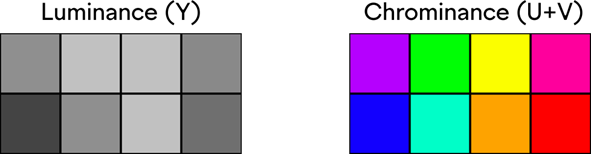

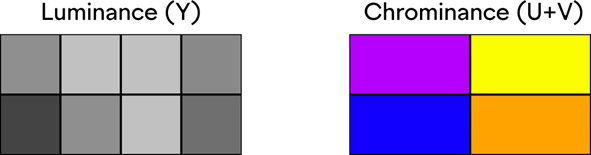

Chroma Subsampling

The YUV color space makes it possible to use chroma subsampling, a method of reducing the spatial resolution of the color information in a signal while retaining the full luminance data. The technique exploits the fact that the human visual system is more sensitive to variations in brightness (luminance) than in color (chrominance). By reducing the amount of color information, chroma subsampling significantly lowers the bandwidth and storage requirements for video data without substantially affecting perceived image quality.

A video signal consists of luminance information, which represents brightness levels, and chrominance information, which represents color. Chrominance is further divided into two components, U and V. Chroma subsampling is expressed in a notation such as 4:4:4, 4:2:2, or 4:2:0, which describes a reference block of pixels as wide as the first number and two rows high (typically 4x2 pixels). The first number (4 in these examples) is the width of that block, which is also the number of luminance samples in each row.

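The second number is the number of chrominance samples (U and V) in the first row of the block, showing how many color samples are taken relative to the luminance samples. The third number is the number of chrominance samples that change between the first and second row: if it equals the second number, the second row has its own chroma samples, and if it is 0, the second row reuses the chroma samples of the first row.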
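The notation can be read mechanically. This sketch, with hypothetical helper names, treats a ratio written as J:a:b as a block J pixels wide and two rows high, and counts the chroma samples kept per chroma plane as the first-row samples plus the samples that change in the second row.

```python
# Sketch: interpret J:a:b chroma subsampling notation over a J x 2 pixel block.
# Assumption: b counts the chroma samples that change in row 2 (0 = reuse row 1).
def parse_ratio(notation: str) -> tuple[int, int, int]:
    # Ignore an optional fourth (alpha) number such as in "4:2:2:4"
    j, a, b = (int(part) for part in notation.split(":")[:3])
    return j, a, b


def chroma_fraction(notation: str) -> float:
    """Chroma samples kept per chroma plane, relative to the number of luma samples."""
    j, a, b = parse_ratio(notation)
    luma_samples = 2 * j      # J pixels wide, 2 rows high
    chroma_samples = a + b    # samples in row 1 plus changed samples in row 2
    return chroma_samples / luma_samples


if __name__ == "__main__":
    for ratio in ("4:4:4", "4:2:2", "4:2:0", "4:1:1"):
        print(ratio, chroma_fraction(ratio))
```

Under this reading, 4:2:0 and 4:1:1 both keep a quarter of the chroma samples per plane; they differ only in where those samples sit (half resolution in both directions versus quarter resolution horizontally).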

4:4:4 subsampling means that no chroma subsampling is applied. Every pixel has its own color information, resulting in the highest quality but also the largest bitrate.

4:2:2 subsampling halves the horizontal color resolution but keeps full color resolution in the vertical direction. It is a common compromise between quality and bandwidth in professional video environments.

4:2:0 subsampling halves the color resolution both horizontally and vertically. It is widely used in consumer video formats and devices (e.g., Blu-ray, DVD, streaming media, PTZ cameras, and prosumer cameras) because it significantly reduces the bitrate with minimal visible loss of quality.

4:1:1 subsampling reduces the horizontal color resolution to one quarter while keeping full color resolution in the vertical direction.
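To put the bandwidth savings in numbers, the following sketch computes the uncompressed size of a single planar frame. It assumes 8 bits (one byte) per sample, one luma plane plus two chroma planes, and frame dimensions evenly divisible by the subsampling factors; the `SCHEMES` table and the `frame_bytes` function are illustrative names.

```python
# Sketch: uncompressed size of one planar frame for several subsampling schemes.
# Assumptions: 1 byte per sample, dimensions divisible by the chroma divisors.
SCHEMES = {
    # scheme: (horizontal chroma divisor, vertical chroma divisor)
    "4:4:4": (1, 1),
    "4:2:2": (2, 1),
    "4:2:0": (2, 2),
    "4:1:1": (4, 1),
}


def frame_bytes(width: int, height: int, scheme: str, bytes_per_sample: int = 1) -> int:
    h_div, v_div = SCHEMES[scheme]
    luma = width * height                                  # full-resolution Y plane
    chroma_plane = (width // h_div) * (height // v_div)    # one U or V plane
    return (luma + 2 * chroma_plane) * bytes_per_sample


if __name__ == "__main__":
    w, h = 1920, 1080
    full = frame_bytes(w, h, "4:4:4")
    for scheme in SCHEMES:
        size = frame_bytes(w, h, scheme)
        print(f"{scheme}: {size:,} bytes ({size / full:.0%} of 4:4:4)")
```

For a 1920x1080 frame this gives 6,220,800 bytes for 4:4:4, 4,147,200 bytes for 4:2:2 (two thirds), and 3,110,400 bytes for both 4:2:0 and 4:1:1 (one half).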

Alpha Channel

The YUVA color space extends the YUV color space with an alpha channel. The alpha channel represents the opacity of each pixel, allowing varying levels of transparency so that images can be composited over one another.

The alpha channel is usually sampled at the same resolution as luminance, which is indicated by a fourth number in the notation, as in 4:2:2:4, 4:2:0:4, or 4:4:4:4.
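The cost of adding alpha can be estimated with a block-based reading of the notation. This hypothetical helper assumes a block as wide as the first number and two rows high, and that the optional fourth number gives the alpha samples per row in both rows, so an alpha value equal to the first number means full-resolution alpha.

```python
# Sketch: average samples per pixel for J:a:b[:alpha] notation over a J x 2 block.
# Assumption: the optional 4th number is alpha samples per row, present in both rows.
def samples_per_pixel(notation: str) -> float:
    parts = [int(p) for p in notation.split(":")]
    j, a, b = parts[:3]
    alpha = parts[3] if len(parts) > 3 else 0
    luma = 2 * j                 # Y samples in the J x 2 block
    chroma = 2 * (a + b)         # U and V planes, each with a + b samples
    alpha_samples = 2 * alpha    # equals the luma count when alpha == J
    return (luma + chroma + alpha_samples) / (2 * j)


if __name__ == "__main__":
    for ratio in ("4:2:0", "4:2:0:4", "4:2:2", "4:2:2:4", "4:4:4:4"):
        print(ratio, samples_per_pixel(ratio))
```

With these assumptions, full-resolution alpha raises 4:2:0 from 1.5 to 2.5 samples per pixel and 4:2:2 from 2 to 3, which is why alpha-capable formats are noticeably heavier than their non-alpha counterparts.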

Bit Depth

Bit depth, also known as color depth, refers to the number of bits used to represent each color component (channel) of a pixel in digital video. A higher bit depth allows more distinct values per channel, and therefore more colors, resulting in smoother and more nuanced images. Common bit depths include:

8-bit: Capable of displaying 256 shades per channel (for example Y, U, and V), resulting in about 16.7 million colors in total (256 x 256 x 256).

10-bit: Can display 1,024 shades per channel, offering over a billion colors. This greater range allows for finer gradients and more detailed color representation, reducing banding in video images.
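The counts above follow from simple powers of two. The sketch below assumes three channels and that every code value is usable; the function names are illustrative.

```python
# Sketch: levels per channel and total color combinations for a given bit depth.
# Assumptions: three channels (Y, U, V or R, G, B) and every code value usable.
def levels_per_channel(bits: int) -> int:
    return 2 ** bits


def total_colors(bits: int, channels: int = 3) -> int:
    return levels_per_channel(bits) ** channels


if __name__ == "__main__":
    for bits in (8, 10):
        print(f"{bits}-bit: {levels_per_channel(bits):,} levels, {total_colors(bits):,} colors")
```

These are theoretical maxima. Video frequently uses limited (studio) range, for example 16 to 235 for 8-bit luma, so fewer code values are used in practice.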
